5 Comparison of coding algorithms

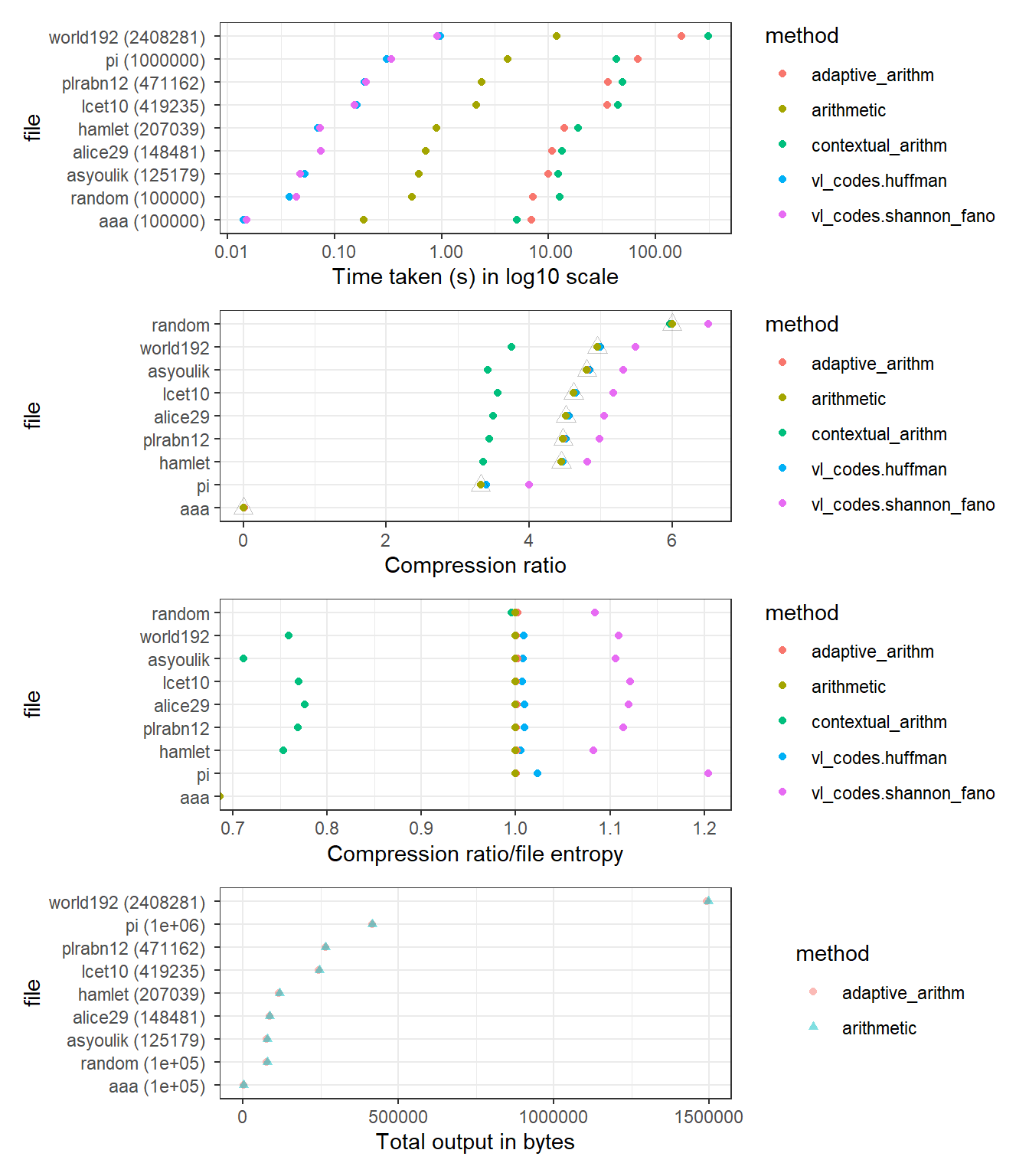

Figure 5.1: Comparison of compression algorithms (a): comparison of time used

5.0.1 Computation time

In Figure 5.1 a, the contextual arithmetic and adaptive arithmetic algorithms took the longest time to finish for files of various lengths and types. Arithmetic coding had a medium rate while Huffman and Shanno-Fano coding were the fastest. There was no observable pattern regarding which file contextual arithmetic perform slower than adaptive arithmetic, as the two files that adaptive arithmetic performs faster are world192 and aaa, two very distinct files. The discussions in 3.3.2 suggest the none of the files are too large for parsing files twice to be the computational bottleneck.

5.0.2 Compression ratio

In Figure 5.1 b and c, it is observed that contextual arithmetic coding had the lowest compression rate and Shannon-Fano had the highest for all English files. The high performance of contextual coding on English files was expected because English was not i.i.d, as discussed earlier. This advantage diminished at random, pi and aaa, which were random files or artificial files that all encoders performed well.

Except aaa, the compresssion rates are similar across all files. The file with highest compression rate was random but this distinction no longer existed when compression rate was normalized by entropy. This indicated the importance of normalizing compression rate by entropy.

5.0.3 Total compressor output

The theory in 3.3.1 expected that, when adding the size of the probability distribution (in bytes) to the size of compression output of the arithmetic encoder and compare that with the size of the output of the adaptive arithmetic encoder, we would expect situations where the adaptive arithmetic encoder performs worse than the arithmetic encoder because it did not converge to source entropy, and other situations where the adaptive encoder performs better because its output discount the probability distribution. However, in Figure d, it was observed that both adaptive and static arithmetic encoder give similar total output in bytes.

Further experiments were done on Hamlet and world192, where the adaptive encoder had lower total ouptut intially. It was hypothesized that by reducing the size of source iteratively, there would be a cross-over point where the static encoder performs better than the adaptive encoder because the adaptive encoder fails to converge. However, this was not observed, either. This curious result would constitute important future work where a more comprehensive study including variables such as \(\delta\).